By Kenji Yamauchi (Analytics Team)

In this blog post, we introduce our new Blue/Green-based upgrade strategy for the Amazon EKS-powered RevComm analytics platform, along with automation that streamlines the process. This is the English version of a similar blog post; please check this post for the Japanese version.

RevComm’s Analytics Platform

Before we get into the details of the upgrade, here is a brief introduction to RevComm's analytics infrastructure. RevComm offers several products, such as MiiTel and MiiTel Meetings, but they all share a single infrastructure for analytics such as transcription. This infrastructure is not a single application: it consists of multiple applications, divided into modules such as speech recognition and other analytics functions, all of which are currently hosted in a single EKS cluster.

AWS resources, including the EKS cluster, and middleware such as the AWS Load Balancer Controller are managed with Terraform. The Kubernetes manifests for the applications running in the cluster are kept in a separate repository and deployed by Argo CD, which runs inside the cluster and tracks the main branch (pull-based GitOps).
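As a rough illustration of the pull-based setup, an Argo CD `Application` tracks a branch of the manifests repository through `spec.source.targetRevision`. The following is a minimal sketch rather than our actual configuration: it builds the manifest as a Python dict (`kubectl` accepts JSON as well as YAML), and the application name, repository URL, and path are hypothetical placeholders.

```python
import json


def argocd_application(name: str, repo_url: str, target_revision: str, path: str) -> dict:
    """Build a minimal Argo CD Application manifest as a plain dict."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                # Branch this Argo CD instance follows ("main" in the current cluster)
                "targetRevision": target_revision,
                "path": path,
            },
            "destination": {
                # The in-cluster API server, i.e. the cluster Argo CD itself runs in
                "server": "https://kubernetes.default.svc",
                "namespace": name,
            },
            # Sync automatically when the tracked branch changes
            "syncPolicy": {"automated": {}},
        },
    }


if __name__ == "__main__":
    app = argocd_application(
        name="speech-recognition",  # hypothetical application name
        repo_url="https://github.com/example/manifests.git",  # hypothetical repository
        target_revision="main",
        path="apps/speech-recognition",  # hypothetical path
    )
    print(json.dumps(app, indent=2))
```

Pointing a second cluster's Argo CD at a different branch only changes `target_revision`, which is the property the Blue/Green flow below relies on.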

Upgrade strategy for the EKS cluster

Since adopting EKS, RevComm's analysis infrastructure has used the in-place method, i.e., upgrading the version of the existing cluster directly. As mentioned above, the infrastructure is managed as IaC, so an in-place upgrade takes only a few configuration changes, but the approach has some drawbacks.

More concretely, an in-place upgrade performs rolling updates of resources, including the node groups, as described in the EKS Best Practices Guides. As a result, we risked downtime during cluster upgrades and could not roll back if issues arose. Moreover, the upgrade process took several hours to complete.

A Blue/Green upgrade, on the other hand, prepares a cluster running the new version alongside the current one and gradually shifts traffic to it. Because the old cluster is discarded only after the new cluster's behavior has been confirmed, this avoids the problems above. The cost is the overhead of preparing an entirely new cluster, but we nevertheless chose the Blue/Green strategy to improve the availability of the system.
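Route 53 weighted routing is one way to shift traffic gradually: each record in a group receives a share of DNS answers equal to its weight divided by the sum of the group's weights, with weights between 0 and 255. The sketch below computes those shares for a hypothetical cutover schedule (0, 10, 50, and 100 percent to green); the step values are illustrative, not the ones we use, and resolver caching means the real split only approximates the computed one.

```python
# Sketch: expected traffic split under Route 53 weighted routing.
# The cutover steps used in the demo are hypothetical examples.

MAX_WEIGHT = 255  # Route 53 accepts integer weights from 0 to 255


def traffic_shares(weights: dict[str, int]) -> dict[str, float]:
    """Fraction of DNS answers each record should receive, given its weight."""
    for ident, w in weights.items():
        if not 0 <= w <= MAX_WEIGHT:
            raise ValueError(f"{ident}: weight must be 0..{MAX_WEIGHT}, got {w}")
    total = sum(weights.values())
    if total == 0:
        # When every weight in the group is 0, Route 53 answers with equal probability
        return {ident: 1 / len(weights) for ident in weights}
    return {ident: w / total for ident, w in weights.items()}


def cutover_schedule(green_percent: list[int]) -> list[dict[str, int]]:
    """Blue/green weight pairs in which green receives the given percentage."""
    return [{"blue": 100 - g, "green": g} for g in green_percent]


if __name__ == "__main__":
    for weights in cutover_schedule([0, 10, 50, 100]):
        share = traffic_shares(weights)["green"]
        print(f"{weights} -> green receives {share:.0%}")
```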

Implementation

How to switch clusters

We implemented the Blue/Green strategy following the article Blue Green Migration of Amazon EKS Blueprints for Terraform, because its architecture was similar to ours. Assuming the current (blue) cluster runs version 1.25 and the new (green) cluster runs 1.28, the flow for switching clusters is as follows:

- Create a new cluster and install middleware through Terraform

- Deploy the applications on the new cluster with Argo CD

  - Change the manifests

    - Use `external-dns` to distribute traffic via Ingress annotations with Route 53's weighted routing

      - Specify values for `set-identifier` and `aws-weight`

  - Store the changes in a feature branch of the manifests repository and have the new cluster's Argo CD refer to it. The current cluster's Argo CD still refers to the main branch.

- Delete the current cluster after confirming the behavior of the new cluster

- Merge the feature branch into the main branch and point the new cluster's Argo CD at the main branch.
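For the manifest change in the list above, here is a minimal sketch of an Ingress that each cluster could carry. external-dns reads the `external-dns.alpha.kubernetes.io/set-identifier` and `external-dns.alpha.kubernetes.io/aws-weight` annotations and creates a weighted Route 53 record for the Ingress host. The host, service name, port, identifiers, and weights are hypothetical, and `ingressClassName: alb` assumes the AWS Load Balancer Controller's usual class name; a real ALB Ingress would typically need further `alb.ingress.kubernetes.io/*` annotations as well.

```python
import json

# external-dns annotations that turn the Ingress host into a weighted Route 53 record
SET_IDENTIFIER = "external-dns.alpha.kubernetes.io/set-identifier"
AWS_WEIGHT = "external-dns.alpha.kubernetes.io/aws-weight"


def weighted_ingress(host: str, set_identifier: str, weight: int,
                     service: str, port: int) -> dict:
    """Ingress whose DNS record joins a Route 53 weighted group via external-dns."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": service,
            "annotations": {
                # Must differ between the blue and green records for the same host
                SET_IDENTIFIER: set_identifier,
                # Annotation values are strings, so the weight is serialized
                AWS_WEIGHT: str(weight),
            },
        },
        "spec": {
            "ingressClassName": "alb",  # assumed AWS Load Balancer Controller class
            "rules": [{
                "host": host,  # external-dns creates the DNS record for this host
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical values: blue keeps most traffic while green is being verified
    blue = weighted_ingress("api.example.com", "blue", 90, "analytics-api", 80)
    green = weighted_ingress("api.example.com", "green", 10, "analytics-api", 80)
    print(json.dumps([blue, green], indent=2))
```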

Automation

Although these steps only involve adjusting Terraform variables and modifying parts of the manifests, they are more complicated than an in-place upgrade. We therefore automated them with GitHub Actions to avoid relying on specific team members and to prevent operational errors. Two processes are automated: creating the new cluster and deleting the current cluster, as shown in the diagram below.

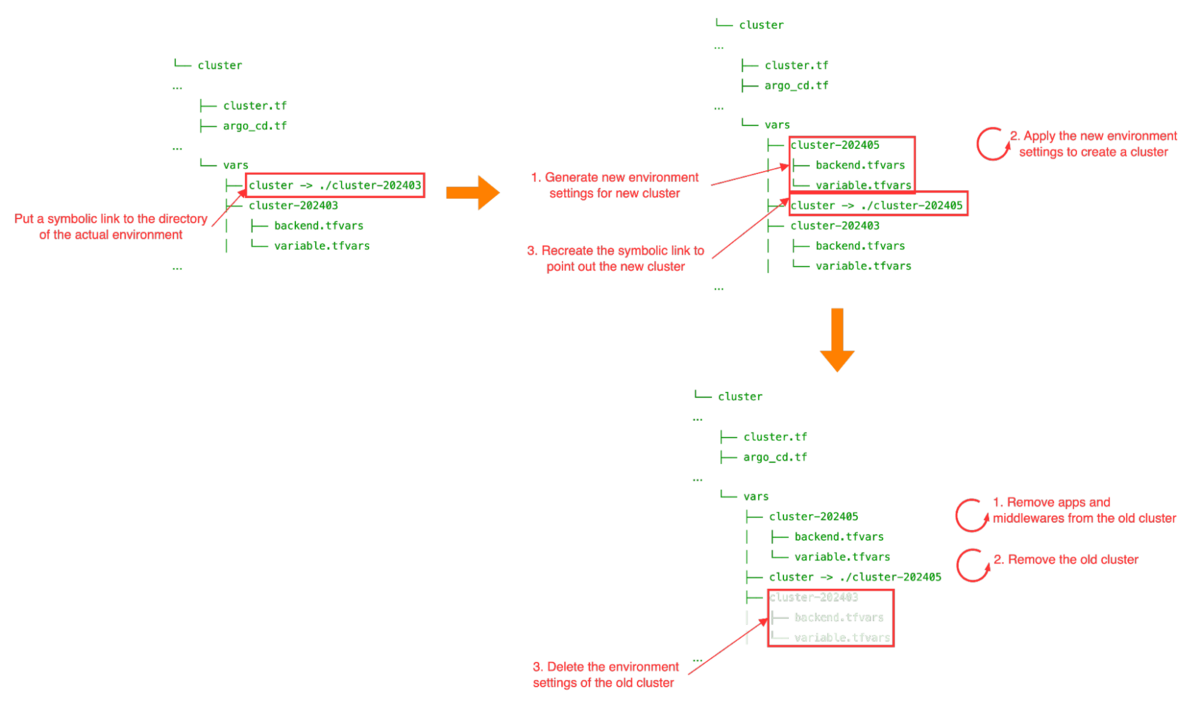

Under its `vars` directory, the Terraform code for the analysis infrastructure has a directory per environment (development, staging, and production) that manages backends and variables; we refer to these simply as environment settings. Each set of environment settings is managed under an identifier such as `cluster-YYYYMM`, and the settings for the current cluster are pointed to by a symbolic link.
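A minimal sketch of switching that symbolic link, assuming one directory per cluster identifier inside each environment directory and a link named `current` (the link name, the layout, and the example identifiers are all hypothetical). The new link is created under a temporary name and renamed over the old one with `os.replace`, so on POSIX file systems the switch happens in a single rename and the link is never missing.

```python
import os
import tempfile
from pathlib import Path


def switch_current(env_dir: Path, identifier: str, link_name: str = "current") -> None:
    """Point env_dir/<link_name> at env_dir/<identifier>, replacing any old link."""
    if not (env_dir / identifier).is_dir():
        raise FileNotFoundError(env_dir / identifier)
    tmp = env_dir / f".{link_name}.tmp"
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()  # leftover from an interrupted run
    # A relative target keeps the link valid wherever the repository is checked out
    tmp.symlink_to(identifier, target_is_directory=True)
    # rename(2) replaces the old link in one step
    os.replace(tmp, env_dir / link_name)


def current_identifier(env_dir: Path, link_name: str = "current") -> str:
    """Identifier the link currently points to."""
    return os.readlink(env_dir / link_name)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        env = Path(d) / "production"  # stands in for a hypothetical vars/production
        for ident in ("cluster-202401", "cluster-202407"):
            (env / ident).mkdir(parents=True)
        switch_current(env, "cluster-202401")
        switch_current(env, "cluster-202407")
        print(current_identifier(env))  # prints cluster-202407
```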

With this structure, creating a new cluster means generating new environment settings, creating the cluster with `terraform apply`, and recreating the symbolic link. Removing the current cluster means removing the middleware and applications hosted in it, as well as the cluster itself and its environment settings.
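The creation and deletion steps can be sketched as a settings generator plus ordered command lists that a workflow job would run from the Terraform root. Everything concrete here is an assumption for illustration: the S3 backend, the file names `backend.hcl` and `terraform.tfvars`, the variables `cluster_name` and `cluster_version`, the state-key pattern, and the bucket and region values. The symlink switch after `apply` is omitted, and removing the middleware and applications before `terraform destroy` is left as a comment because the post does not describe how it is done. The `init -reconfigure -backend-config`, `-var-file`, and `-auto-approve` options are standard Terraform CLI flags.

```python
import shlex
import tempfile
from pathlib import Path


def generate_settings(env_dir: Path, identifier: str, version: str,
                      bucket: str, region: str) -> Path:
    """Write backend and variable files for a new cluster (hypothetical layout)."""
    settings = env_dir / identifier
    settings.mkdir(parents=True, exist_ok=False)  # never overwrite existing settings
    # A state key per identifier keeps the new cluster's state apart from the old one
    (settings / "backend.hcl").write_text(
        f'bucket = "{bucket}"\n'
        f'key    = "{env_dir.name}/{identifier}/terraform.tfstate"\n'
        f'region = "{region}"\n'
    )
    (settings / "terraform.tfvars").write_text(
        f'cluster_name    = "{identifier}"\n'
        f'cluster_version = "{version}"\n'
    )
    return settings


def create_commands(settings: Path) -> list[list[str]]:
    """Terraform commands that create the cluster described by `settings`."""
    return [
        ["terraform", "init", "-reconfigure", f"-backend-config={settings / 'backend.hcl'}"],
        ["terraform", "apply", "-auto-approve", f"-var-file={settings / 'terraform.tfvars'}"],
    ]


def delete_commands(settings: Path) -> list[list[str]]:
    """Commands that destroy the cluster and then drop its settings."""
    # Assumes middleware and applications in the cluster were already removed.
    return [
        ["terraform", "init", "-reconfigure", f"-backend-config={settings / 'backend.hcl'}"],
        ["terraform", "destroy", "-auto-approve", f"-var-file={settings / 'terraform.tfvars'}"],
        ["rm", "-r", str(settings)],
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        env_dir = Path(d) / "production"  # stands in for a hypothetical vars/production
        settings = generate_settings(env_dir, "cluster-202407", "1.28",
                                     bucket="example-tfstate", region="ap-northeast-1")
        # Dry run: print the commands instead of executing them
        for cmd in create_commands(settings):
            print(shlex.join(cmd))
```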

To prevent the errors that manual operation invites, we automated these steps with GitHub Actions. The workflows take identifiers and cluster versions as inputs and carry out the entire process in a matter of minutes: creating, switching, and deleting clusters, and deploying applications to the new cluster.
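Because the workflows are driven by identifiers and versions, a cheap safeguard is to validate those inputs before anything is created. The checks below are a hypothetical example rather than our workflow's actual logic: the identifier must match `cluster-YYYYMM` and differ from the current one, and the version must be a newer `1.NN` release. Comparing minor versions as integers avoids the string-comparison trap where "1.9" sorts after "1.28".

```python
import re

IDENTIFIER = re.compile(r"cluster-\d{4}(0[1-9]|1[0-2])")  # cluster-YYYYMM
VERSION = re.compile(r"1\.(\d+)")  # Kubernetes 1.NN


def validate_inputs(new_id: str, new_version: str,
                    current_id: str, current_version: str) -> None:
    """Reject inputs that would reuse the current identifier or not upgrade."""
    if not IDENTIFIER.fullmatch(new_id):
        raise ValueError(f"identifier must look like cluster-YYYYMM: {new_id!r}")
    if new_id == current_id:
        raise ValueError(f"{new_id} is already the current cluster")
    new_match = VERSION.fullmatch(new_version)
    cur_match = VERSION.fullmatch(current_version)
    if not new_match or not cur_match:
        raise ValueError("versions must look like 1.NN")
    # Compare minor versions numerically, not as strings
    if int(new_match.group(1)) <= int(cur_match.group(1)):
        raise ValueError(f"{new_version} is not newer than {current_version}")


if __name__ == "__main__":
    validate_inputs("cluster-202407", "1.28", "cluster-202401", "1.25")  # passes
```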

Conclusion

In this article, we introduced our migration from an in-place EKS upgrade strategy to a Blue/Green one.

Compared to the in-place method, Blue/Green required us to automate and establish procedures so that it would not increase man-hours. Still, because we already had an IaC foundation, we were able to adopt the Blue/Green method and get the most out of it. The analysis infrastructure also has few stateful components and required few application-side changes, which made it a good fit.

Thank you for reading, and happy upgrading!