Intro

Hello, my name is Raman Yachi. I am an engineer at RevComm working on the Accounts team. Our product suite follows a microservices architecture, and I would like to talk about how we automate the testing of our Account backend. It is a pivotal part of the system because it deals predominantly with user authentication: failures here could leave users unable to log in to our services, which is something we want to avoid as much as possible.

Our first line of defense is ensuring that all our features have corresponding unit tests. For the backend we use Pytest as our testing framework, and every commit pushed to a PR triggers the whole battery of tests, so we can verify functionality on a commit-by-commit basis and know exactly where a breaking change was introduced.
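As a rough illustration of the kind of unit test Pytest collects, here is a minimal sketch. The `hash_password` and `verify_password` helpers are hypothetical stand-ins invented for this example; they are not taken from our Account backend.

```python
import hashlib
import hmac


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a password hash with PBKDF2 (hypothetical helper for illustration)."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Compare a candidate password against a stored hash in constant time."""
    return hmac.compare_digest(hash_password(password, salt), expected)


# Pytest discovers any function whose name starts with `test_`.
def test_correct_password_is_accepted():
    salt = b"fixed-salt-for-tests"
    stored = hash_password("correct horse", salt)
    assert verify_password("correct horse", salt, stored)


def test_wrong_password_is_rejected():
    salt = b"fixed-salt-for-tests"
    stored = hash_password("correct horse", salt)
    assert not verify_password("battery staple", salt, stored)
```

Running `pytest` in the directory containing this file would execute both test functions and report any failing assertion.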

Unit tests do not tell the whole story, so in addition we run system tests to check that the service performs as expected in real-world scenarios. We do this by running Postman tests (E2E tests) against our live server’s API endpoints and validating their responses.

Issue

Before we automated these tests, our team ran manual API tests from local machines using Postman to confirm that a newly deployed service was working as intended. The problem was that the test suites, while version controlled, were not guaranteed to be identical on every machine. One person’s run could fail while another’s succeeded, and the only way to tell which was right was to line up the backend and test versions. This made the testing process unreliable, because it was hard to tell at first glance which machine’s result was correct.

Furthermore, coordinating variables between the production, staging and development environments could become a hassle for people not used to Postman. So we looked for an alternative that would serve as a single source of truth on whether the shipped changes broke any endpoint.

From these issues we derived two key requirements:

- A testing framework that tests the backend against the correct (most recent) API structure (endpoint URL, body format, etc.) and executes on non-local infrastructure, so that results are available across the team.

- A simple enough interface that requires minimal configuration on the user's end.

What we did

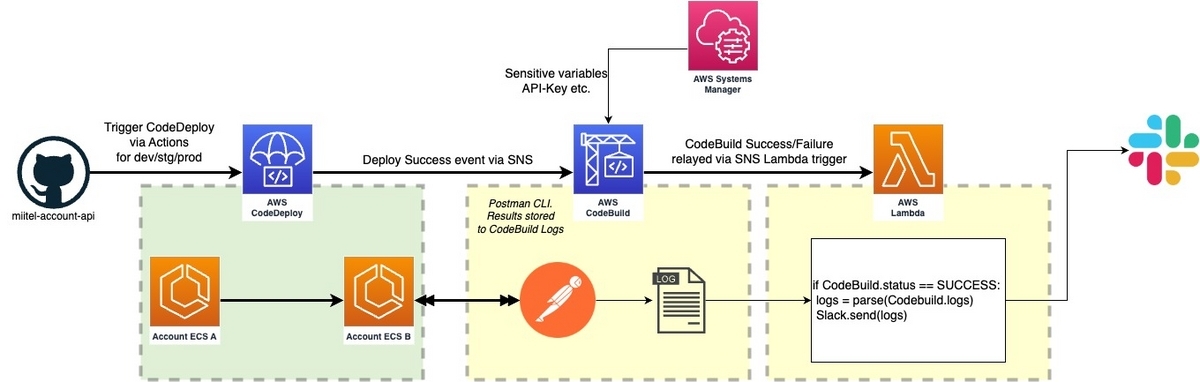

To accomplish this, our first step was simply to migrate the Postman test runs to a CodeBuild instance, which ensures that the test platform is up to date and independent of our machines. Once the tests were set up, we still needed a way to trigger them, along with a notification system. Both are shown in the architecture diagram: to the left of the CodeBuild testing step is the trigger portion of the pipeline, and to the right is the notification mechanism.

Every time we release to an environment, a corresponding deployment is triggered in CodeDeploy via GitHub Actions. Once this deployment concludes successfully, we can assume that the new release is available, and we initiate our integration tests.

We do this in the next step by triggering a CodeBuild build. CodeBuild then tests the new backend instance through its API by mimicking user API calls (that is, by calling the user-facing endpoints). The tool we use for this is Newman, Postman’s CLI counterpart.

https://learning.postman.com/docs/collections/using-newman-cli/command-line-integration-with-newman/

This allows us to craft and debug test cases in Postman and then have our CodeBuild instance run those same tests.
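To make the Newman step more concrete, here is a minimal sketch of how a build script might assemble and run the Newman command. The file paths are hypothetical placeholders, and wrapping the call in Python is an assumption made for illustration; a CodeBuild buildspec could just as well invoke `newman` directly.

```python
import subprocess


def build_newman_command(collection: str, environment: str, report_path: str) -> list[str]:
    """Assemble a `newman run` invocation that also writes a JUnit XML report."""
    return [
        "newman", "run", collection,
        "--environment", environment,         # Postman environment file (staging, production, ...)
        "--reporters", "cli,junit",           # console output plus a machine-readable report
        "--reporter-junit-export", report_path,
    ]


def run_collection(collection: str, environment: str, report_path: str) -> int:
    """Run the collection and return Newman's exit code (non-zero when a test fails)."""
    command = build_newman_command(collection, environment, report_path)
    completed = subprocess.run(command, check=False)
    return completed.returncode


if __name__ == "__main__":
    # Hypothetical paths; the real repository layout is not shown in this post.
    exit_code = run_collection(
        "collections/accounts.postman_collection.json",
        "environments/staging.postman_environment.json",
        "reports/newman-junit.xml",
    )
    raise SystemExit(exit_code)
```

Selecting the environment file per run keeps the production, staging and development variables out of individual developers' Postman setups.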

The test results are uploaded as a CodeBuild report. The conclusion of the CodeBuild run then triggers a Lambda function that receives this report. The Lambda parses the report and sends a message to a dedicated Slack channel, informing everyone of the outcome and results of the test run.
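The notification step could look roughly like the sketch below. It assumes the Lambda has already extracted the passed and total counts from the report, and that the message is delivered through a Slack incoming webhook; the function names, the message wording and the member ID format used for mentions are illustrative assumptions rather than our actual implementation.

```python
import json
import urllib.request
from datetime import datetime


def build_slack_message(passed: int, total: int, finished_at: datetime, mentions=()) -> dict:
    """Build a Slack payload with the pass/total count and a timestamp."""
    status = "passed" if passed == total else "failed"
    text = f"E2E tests {status}: {passed}/{total} passed at {finished_at.isoformat()}"
    if status == "failed" and mentions:
        # Slack renders <@MEMBER_ID> as a user mention, which notifies that person.
        text = " ".join(f"<@{member}>" for member in mentions) + " " + text
    return {"text": text}


def post_to_slack(webhook_url: str, message: dict) -> int:
    """POST the payload to a Slack incoming webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Keeping the message formatting separate from the HTTP call makes the formatting logic easy to unit test without contacting Slack.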

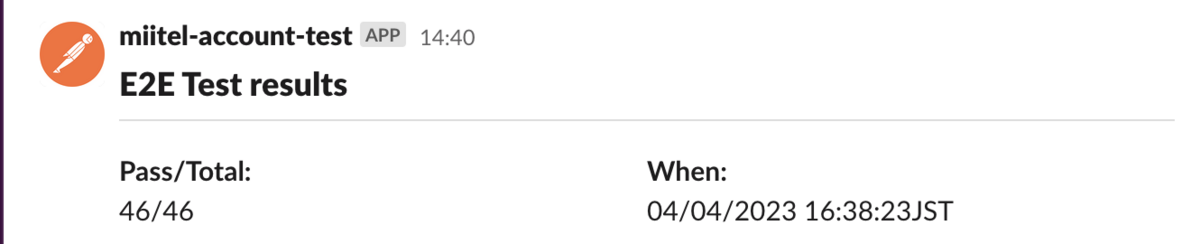

An example result from the E2E tests is shown below, where we get the pass/total count and a timestamp:

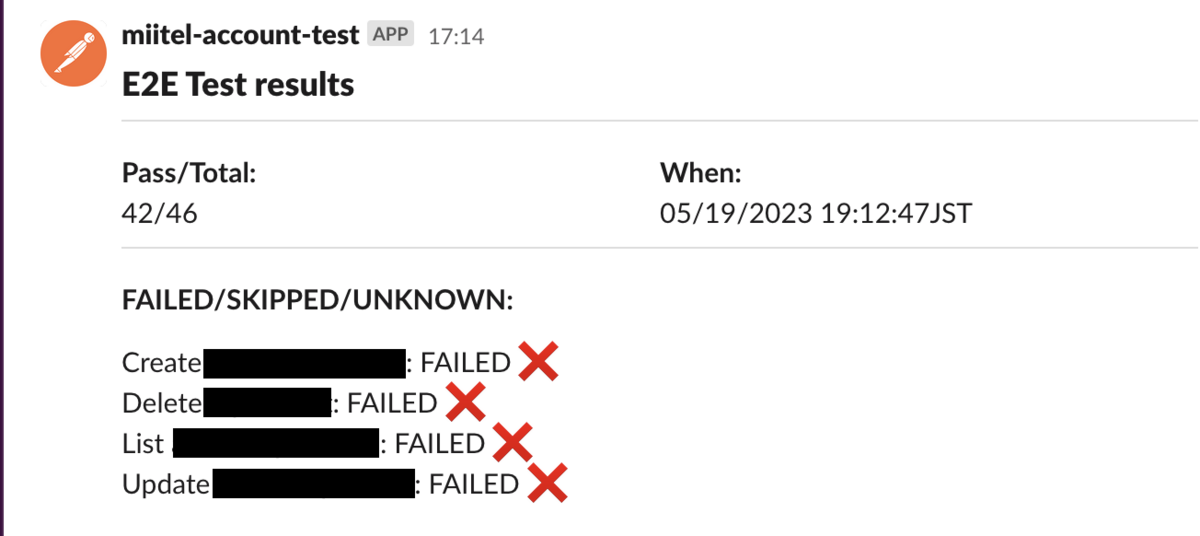

Should any tests fail, the team members are notified by having their names included in the report.

With this, we can confidently know whether our service is functional after every merge. And with the Slack integration, any failure can be tracked down much more quickly, so fixes can be issued faster too.

The AWS infrastructure and the test recipes are managed by us in a single repository. The test recipes are written in Postman, while infrastructure changes are applied via Terraform. This repository also serves as the instruction set for our CodeBuild testing instances. Because the Postman test recipes are version controlled in the same repository, the tests are always up to date whenever an E2E test run is initiated. The whole testing pipeline is therefore self-contained, which allows us to test our backend hermetically.

Conclusion

With the new pipeline, we can test our backend in a CI/CD manner. Developer productivity no longer takes a hit every time testing is required, the tests are reliable, and the results are readily available and easily traced back to their respective changes.

As a parting thought, I really enjoyed this task. It was my first foray into Terraform and CI/CD, and being handed the problem despite that was a blessing in disguise. I was given free rein to tackle the problem on my own, and whenever my lack of experience became apparent, my colleagues were able to provide advice and direction. If you are someone who similarly enjoys working autonomously on interesting problems, I believe RevComm would be a great place for you to contribute and grow!